CPU & Assembly Design + 3-Register Prime Sieve Class Project — Logisim, Homebrewed Assembly Language

By all accounts, CSC 252 shouldn't have been my absolute favorite class in my college career. It was a second-year main track requirement, it was helmed by what many considered the most difficult professor in the department, and it was named "Computer Organization", a phrase which at first glance doesn't seem to mean much of anything.

The course was all about how computers work at the lowest level, and it turned out to be one of the most fascinating semesters of lectures I've ever attended. It took us through the entire process of how computers were developed and how they work, covering everything from individual physical gates to abstract architecture to how to design an ALU.

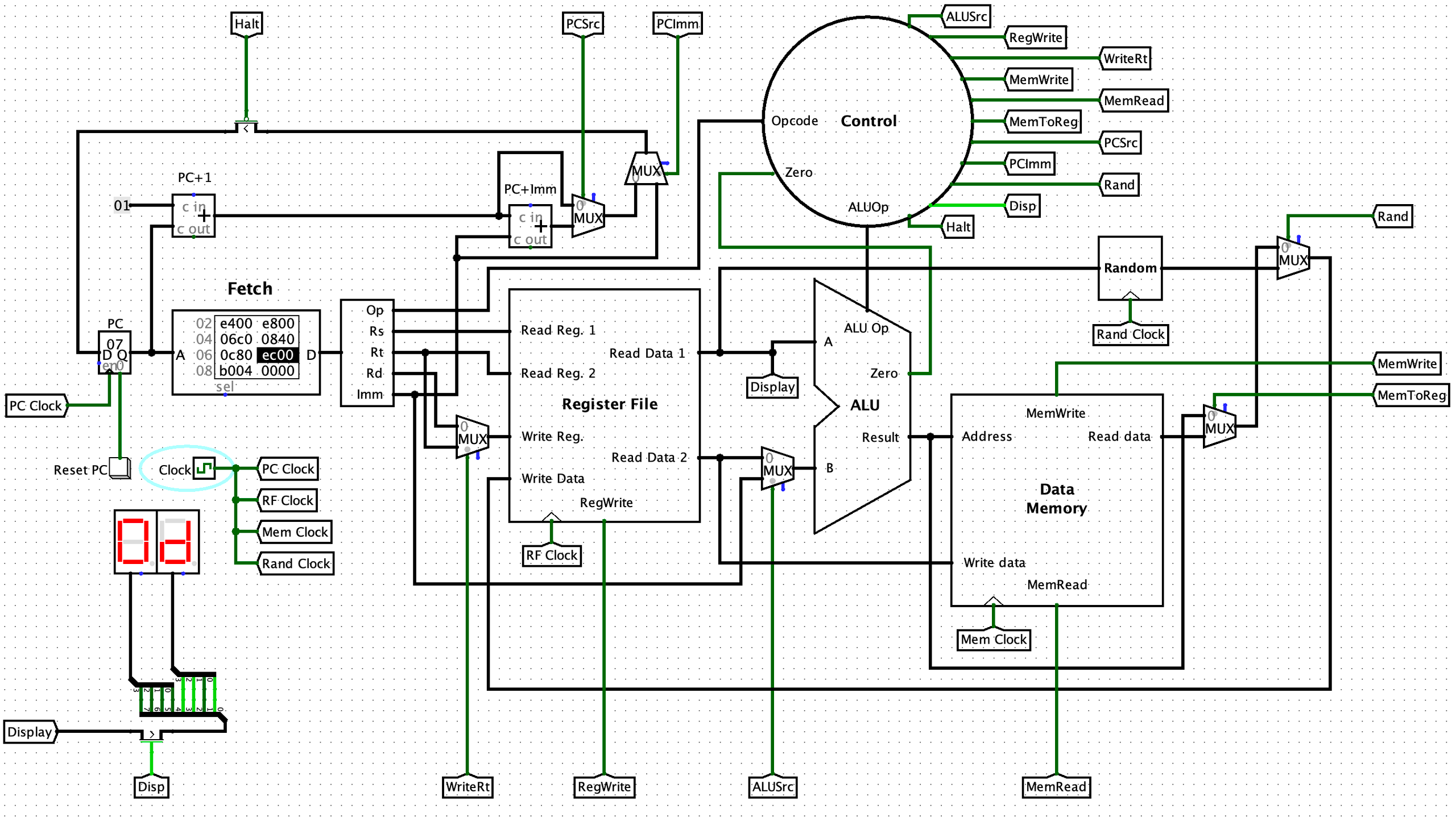

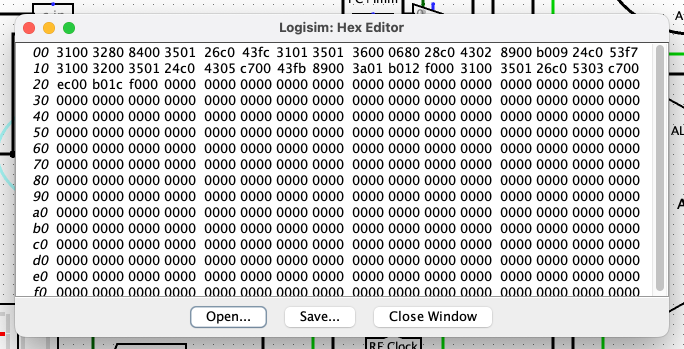

The final project was a culmination of all of this. We were to, as the spec put it, "design the simplest CPU that can do something slightly interesting." The spec also listed a minimum set of 16-bit instructions that our CPUs had to handle. We were then supposed to think of "something interesting" for said CPU to do, and code it in assembly. The whole thing was built and tested in Logisim, a Java-based tool for designing and simulating logic circuits.

Simulation

First things first: things that Logisim does for us. We get gates for free, obviously, since that's the point of the program. There's a module named Fetch that lets us store 16-bit words (optionally loaded from a file) and send them to the rest of the circuit, functioning as our program stored in memory. We get another RAM simulation for limited storage, a random number generator, some multiplexers of various sizes, and an output "screen" that can show two hex digits. It's practically luxurious!

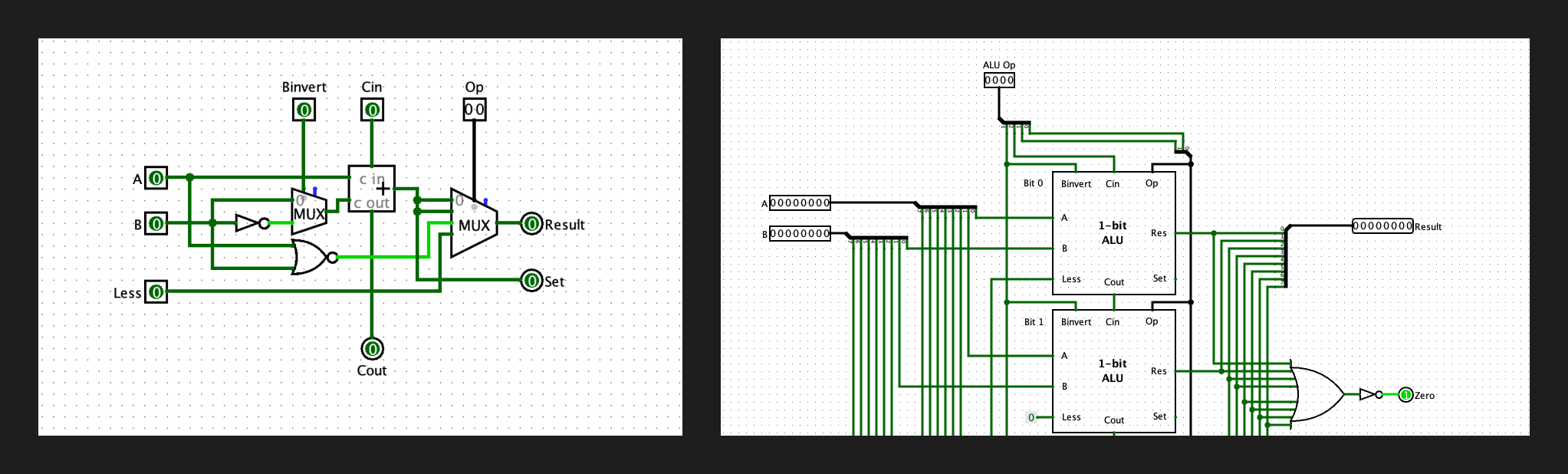

Let's start with one of the harder bits: the ALU.

The Arithmetic Logic Unit is the component of the CPU that does integer math. If an instruction says to add or divide or bit shift or NOR, the CPU passes the operands to the ALU along with a code that selects the operation to perform. The ALU's result is then passed to the control module.
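To make the idea concrete, here is a minimal software sketch of an ALU. It is not the circuit from the project: the operation names (`ADD`, `SUB`, `NOR`, `SHL`, `SHR`) are hypothetical, and the 16-bit data width is an assumption (the spec only says the instructions were 16 bits wide).

```python
MASK = 0xFFFF  # assumed 16-bit data words


def alu(op: str, a: int, b: int) -> int:
    """Apply the selected operation to two operands and return a 16-bit result.

    The operation names are made up for illustration; a real ALU receives
    a few select bits rather than a string.
    """
    a &= MASK
    b &= MASK
    if op == "ADD":
        result = a + b
    elif op == "SUB":
        result = a - b
    elif op == "NOR":
        result = ~(a | b)
    elif op == "SHL":
        result = a << (b & 0xF)  # shift amount limited to 0..15
    elif op == "SHR":
        result = a >> (b & 0xF)
    else:
        raise ValueError(f"unknown ALU operation: {op}")
    # Truncate to 16 bits, which also wraps overflow and negative results.
    return result & MASK
```

In hardware, every operation is computed in parallel and a multiplexer picks one output; the `if` chain here plays the role of that multiplexer. And where this function returns to a caller, the circuit hands its result on to the control module.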

Speaking of:

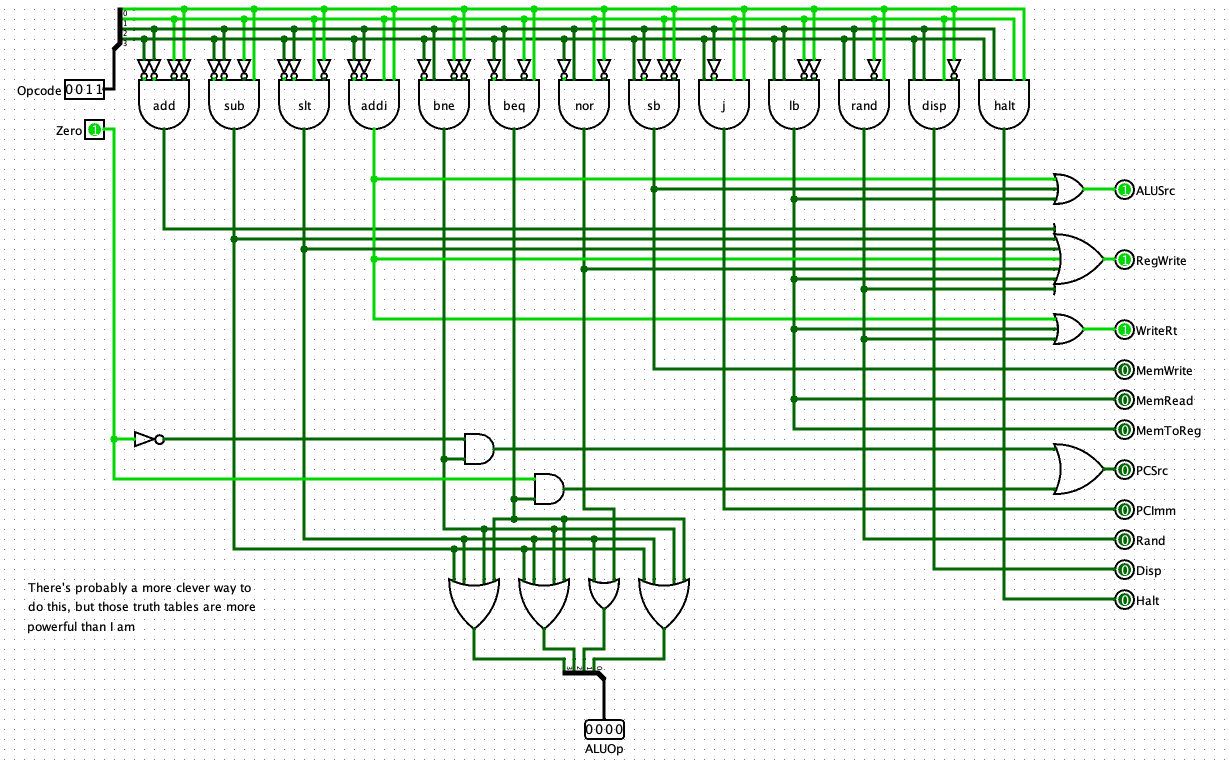

The control module takes in the actual operation code of each instruction and routes it, along with the ALU result, to the appropriate circuits. For example, if the instruction's opcode is to write the next word of data to memory, it sends a signal to the memory module to prepare it for a write. Or, if the CPU needs to skip to a different instruction (when calling a function, for example), it signals the program counter circuit to use the instruction's data as the next instruction address instead of PC+1.
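The program counter decision in particular boils down to a two-way choice. A rough sketch, assuming a hypothetical `is_jump` control signal and a 16-bit address space (neither is taken from the actual spec):

```python
def next_pc(pc: int, is_jump: bool, target: int, addr_bits: int = 16) -> int:
    """Pick the address of the next instruction.

    is_jump stands in for the control signal derived from the opcode;
    target is the address carried in the instruction's data field.
    """
    mask = (1 << addr_bits) - 1
    # A 2-to-1 multiplexer in the circuit: jump target or PC + 1.
    return (target if is_jump else pc + 1) & mask
```

For example, `next_pc(4, False, 0x20)` gives `5`, while `next_pc(4, True, 0x20)` gives `0x20`.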

Add a few more pieces, such as the register file (controlling low-latency volatile CPU storage), the program counter control, and the appropriate wiring of signals from the control unit, and we're ready to code.

Assembly

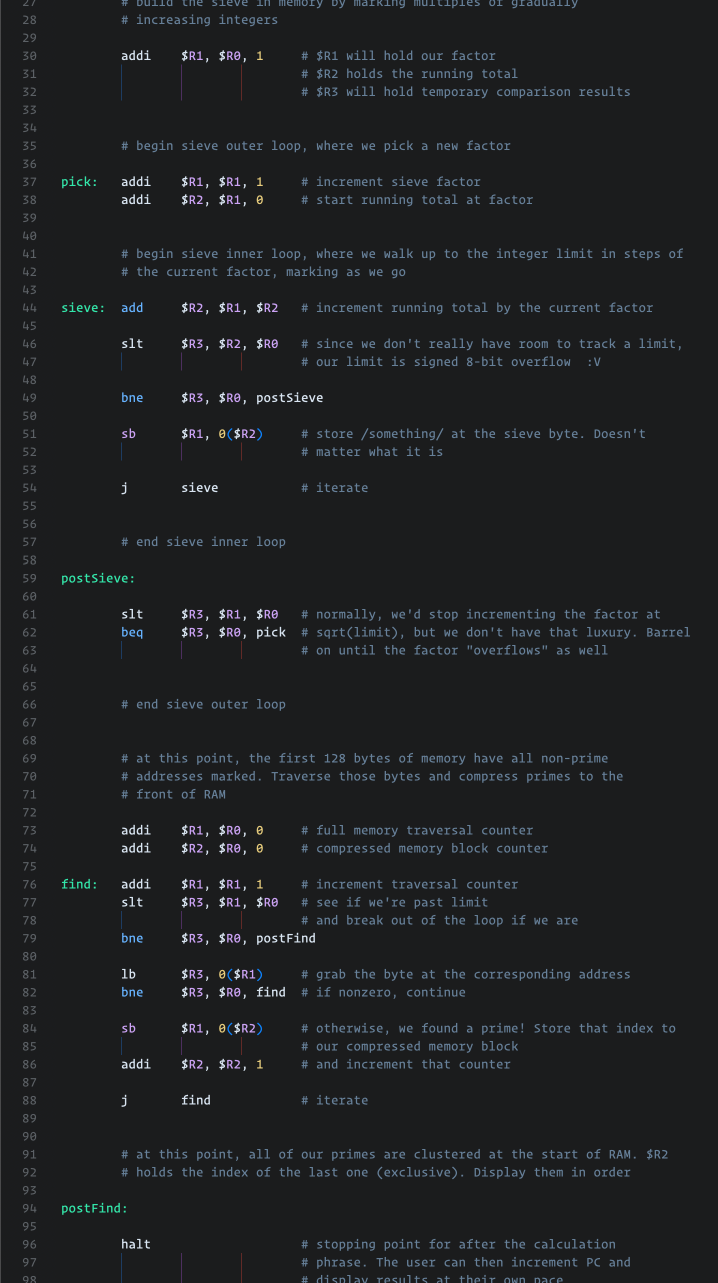

Since we had a preexisting specification for the assembly language we were to implement, the professor was able to create and provide us with an assembler, meaning we could skip that error-prone step and get to coding. We could write our (commented!) programs in a standard text editor, using named ASCII instructions instead of raw 16-bit binary words. Again, practically luxurious.

Our directive was to demonstrate any interesting program running on our CPUs, with the definition of "interesting" left up to us. The snag is that our CPU spec only included three registers, which are how a CPU stores words that persist between instructions. Being limited to holding only three numbers in registers at a time made for an extremely interesting memory-juggling challenge. I made a few programs on my simulation, but my favorite was a prime sieve that produced the prime numbers in the range of 1 to 127.
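For a sense of what that juggling looks like, here is a sketch of a sieve of Eratosthenes written under the same constraint. It is not my original assembly, just an illustration: the variables `r0`, `r1`, and `r2` stand in for the three registers, the `ram` list stands in for the RAM module, and the `primes` list stands in for the output display. All of those names are made up for this example.

```python
LIMIT = 127


def sieve_three_registers(limit: int = LIMIT) -> list[int]:
    """Sieve of Eratosthenes using only three 'register' variables plus RAM."""
    ram = [0] * (limit + 1)  # 0 = possibly prime, 1 = crossed out
    primes = []              # stands in for the output display

    r0 = 2                   # r0: current candidate
    while r0 <= limit:
        r2 = ram[r0]         # r2: flag loaded from RAM
        if r2 == 0:
            primes.append(r0)
            r1 = r0 + r0     # r1: multiple of r0 to cross out
            while r1 <= limit:
                ram[r1] = 1
                r1 = r1 + r0
        r0 = r0 + 1
    return primes
```

The inner loop starts at `r0 + r0` rather than `r0 * r0`, so the sketch needs nothing beyond addition, comparison, and memory access. Calling `sieve_three_registers()` returns the 31 primes from 2 through 127.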

Aside from that, other little programs included a dice roller, a Fibonacci number calculator, and a craps simulation. Assembly is a cool headspace to get into.

This was my favorite class of my CS degree, and computers feel more like dark magic now than ever. Thanks, Dr. Misurda! If this ever comes up when you're searching your own name, I greatly enjoyed your class.